Autonomous AI agents need to handle tasks responsibly, accurately, and swiftly in multiple languages, addressing potentially millions of specifications across hundreds of thousands of products.

Key Takeaways:

- A responsible AI framework for Generative AI spans design, development, testing, deployment, and operation.

- For each element of the framework, we describe the steps required as well as the challenges and success factors.

- We draw upon lessons from implementing this framework, including the deployment of a GenAI agent that supports queries from more than 20,000 customers daily.

Generative AI agents are rapidly transforming customer support across various industries, enhancing the user experience by catering to diverse needs with unprecedented efficiency. Initially, these AI-driven solutions served as a competitive advantage, streamlining complex processes and providing round-the-clock customer service. However, as technology advances, the use of generative AI is evolving from a differentiator to a necessity, becoming a critical component for maintaining competitiveness and achieving productivity goals while meeting customer expectations.

Despite their potential, deploying Generative AI agents is not without challenges. High-profile mistakes, such as a car dealership’s chatbot agreeing to sell a full-size vehicle for just $1, underscore how difficult these systems are to implement effectively. Both private companies and public sector entities face the risk of Generative AI agents miscommunicating policies, which could cause significant operational and reputational damage.

To unlock the full potential of generative AI while minimizing associated risks, organizations must adopt the principles of Responsible AI (RAI). GenAI agents must operate with responsibility, accuracy, and speed across multiple languages and millions of product specifications. The challenges are further compounded by the need to manage frequent product updates and dynamic pricing strategies. Given the complexity of their operating environment, these agents must ensure proficiency (consistently delivering intended outcomes), safety (preventing harmful or inappropriate content), fairness (ensuring equal quality of service and access), security (protecting sensitive data from malicious threats), and compliance (adhering to all relevant laws, policies, and ethical standards).

To address these challenges, a robust framework has been developed for implementing RAI across the lifecycle of GenAI agents. It starts with the initial development phase, followed by rigorous end-to-end testing before any new feature is released. Once the agents are deployed, continuous monitoring and testing are crucial for adapting to changes in the technology ecosystem, such as updates to the underlying foundation models, so that the agents maintain optimal performance over time.

Experience deploying this framework at global industrial goods companies highlights both the success factors and challenges involved. For instance, one implementation involved a Generative AI agent that handles over 20,000 sales queries daily, demonstrating the potential and pitfalls of integrating such technology at scale.

Enhancing Trust and Compliance in GenAI Systems

Responsible AI (RAI) is a comprehensive framework, developed by BCG, that is designed to ensure AI systems deliver their intended benefits while aligning with corporate values. By carefully designing, coding, testing, deploying, and monitoring AI systems, organizations can minimize risks and enhance the reliability of these technologies.

The RAI framework is applied by companies to manage AI systems based on principles such as accountability, fairness, interpretability, safety, robustness, privacy, and security. Given the probabilistic nature of AI, there is always a risk of false positives and negatives, making adherence to these principles crucial for promoting transparency and addressing biases that could skew outcomes.

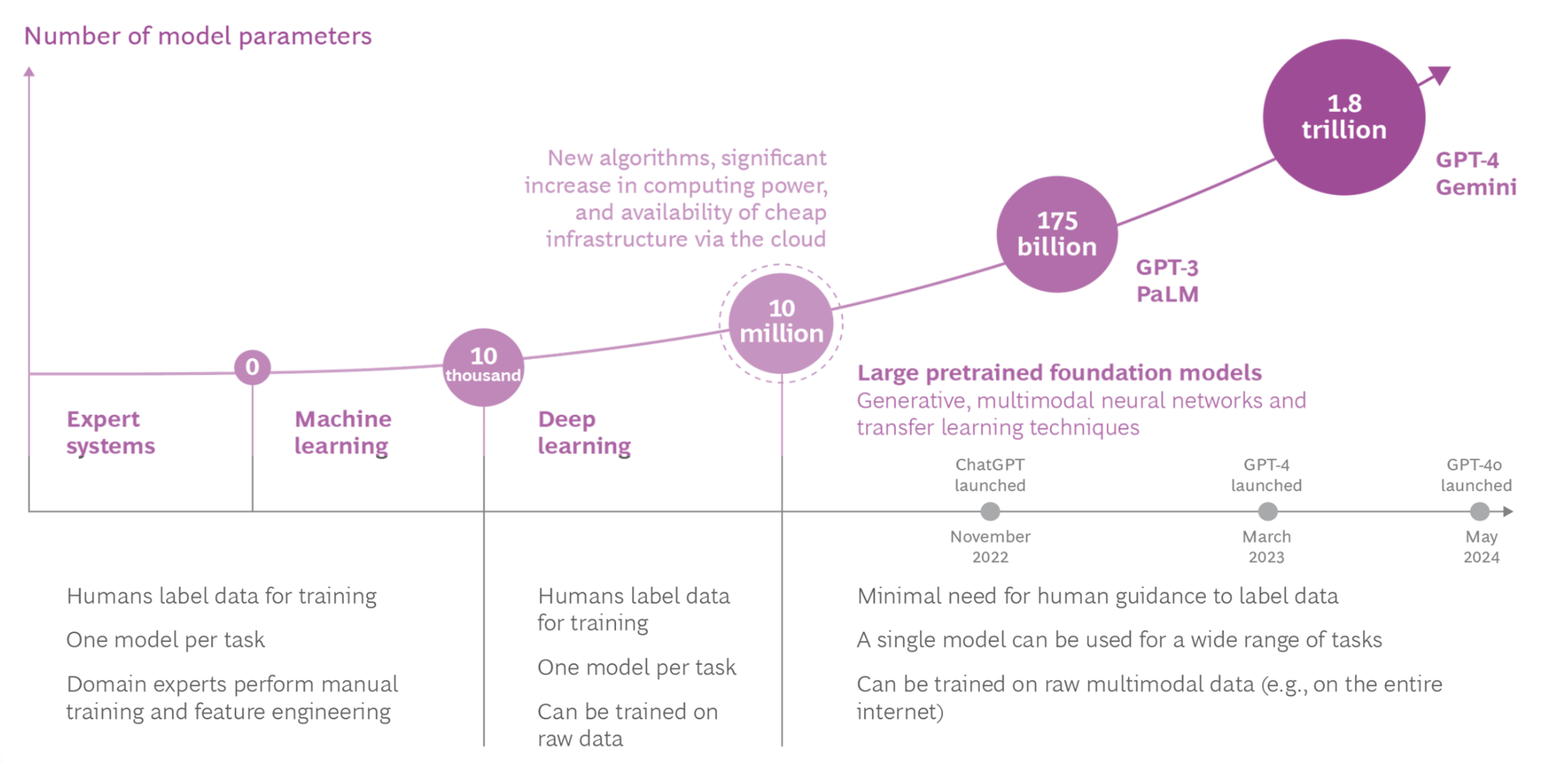

The responsible use of AI has long been a topic of concern, with well-known incidents involving biased hiring practices, discriminatory lending, and breaches of sensitive corporate and consumer data. These challenges were already significant when dealing with AI models that used relatively few parameters and produced stable, quantitative outputs. The introduction of more sophisticated generative AI (GenAI) technologies has greatly increased them. The conversational nature of GenAI allows a much wider range of interactions between GenAI-enabled applications and users, raising the stakes for protecting both corporate and consumer information.

AI models have evolved from managing a handful of parameters in traditional machine learning to handling millions, billions, and even trillions in the large language models (LLMs) that power GenAI. These GenAI systems are stochastic, dynamic, and nondeterministic: they may produce different responses to the same query, and their behavior can shift over time. Their ability to hold natural language conversations with many users simultaneously creates countless opportunities for failure and misuse, including errors, misinformation, offensive language, and intellectual property (IP) concerns.

While lapses in AI systems might appear to be isolated incidents, their consequences can be significant, leading to customer alienation, brand damage, regulatory violations, and financial losses. The risks are particularly pronounced in conversational GenAI agents, such as chatbots, where errors can result in misleading statements, incorrect commitments, or flawed transactions directly affecting customers.

Implementing an RAI framework throughout the entire application lifecycle is essential for building trustworthy GenAI applications. This approach helps organizations govern their data, protect intellectual property, preserve user privacy, and ensure compliance with relevant laws and regulations, thereby safeguarding both their operations and their reputation.

Implementing Responsible AI Across the Generative AI Life Cycle

Design Phase: The journey begins with meticulously mapping out the use cases that the GenAI application will support, paired with a thorough understanding of the associated risks. This involves evaluating the specific requirements needed to ensure that the application operates proficiently, safely, equitably, securely, and in compliance with relevant regulations. Considerations include the types of questions the AI will answer, the necessary data inputs, and how corporate values and AI principles will be integrated into the design. For instance, fairness must be defined in the context of all users and reflected in the AI’s interactions.

To mitigate potential risks, organizations should design technical, process, and policy guardrails. For example, if the company chooses not to allow competitor comparisons, the system prompt can include an instruction such as: “As a sales representative for Steve’s widgets, refrain from comparing our products with those of other companies.” The same discipline extends to data accuracy, such as updating product details for each model year to prevent misinformation. The design phase is also where teams decide which prompts and guardrails to implement, so that the AI supports only the intended use cases.
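A prompt instruction is one guardrail, but a model may not always follow it, so teams often pair it with a check on the drafted reply before it reaches the customer. The sketch below illustrates that layering under stated assumptions: the chat-style message list follows a common convention rather than any specific vendor's API, the competitor names in `COMPETITORS` are hypothetical, and simple keyword matching stands in for the classifiers or moderation tooling a production system would more likely use.

```python
import re
from dataclasses import dataclass

# Instruction from the example above; "Steve's widgets" is the article's illustrative company.
SYSTEM_PROMPT = (
    "As a sales representative for Steve's widgets, refrain from comparing "
    "our products with those of other companies."
)

# Hypothetical competitor names, for illustration only.
COMPETITORS = ("Acme Widgets", "Globex")
FALLBACK = "I can only answer questions about Steve's widgets products."


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reason: str = ""


def build_messages(user_query: str) -> list[dict]:
    """Chat-style message list (assumed format) with the policy as the system message."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_query},
    ]


def check_competitor_mentions(draft: str, competitors=COMPETITORS) -> GuardrailResult:
    """Second line of defense: replace a drafted reply that names a competitor."""
    for name in competitors:
        # Case-insensitive match on the whole competitor name.
        if re.search(rf"\b{re.escape(name)}\b", draft, flags=re.IGNORECASE):
            return GuardrailResult(False, FALLBACK, f"mentions competitor: {name}")
    return GuardrailResult(True, draft)


result = check_competitor_mentions("Our X200 is faster than Acme Widgets' model.")
print(result.allowed, result.reason)  # False mentions competitor: Acme Widgets
```

Keeping the policy in two places (the prompt and the output check) means a lapse in one layer does not go straight to the customer.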

Development and Coding: Developing GenAI agents involves challenges beyond traditional software creation. Key aspects include:

• Prompt Engineering: Crafting and iteratively refining prompts is essential for generating accurate and relevant responses. Adjusting the model’s “temperature” sampling parameter trades predictability for variety: lower values produce more conservative, repeatable outputs, while higher values produce more varied, creative, human-like responses. Iterative testing and refinement improve the system’s overall accuracy.

• Integration: Seamlessly integrating GenAI agents with existing systems is crucial for maintaining consistency and delivering value across the user journey. Utilizing modular APIs and reliable libraries can simplify this process.

• Performance Optimization: Striking a balance between accuracy and response time is vital, especially given the resource-intensive nature of GenAI models. Optimizing prompts and context, along with judiciously determining which tasks benefit most from GenAI, helps mitigate latency issues.

• Scalability: GenAI models must efficiently handle large volumes of requests. Optimizing infrastructure, including leveraging cloud-based solutions, addresses scalability challenges.
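The temperature trade-off in prompt engineering comes from how sampling works. In the standard formulation, the model's raw next-token scores (logits) are divided by the temperature T before the softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T), so T below 1 concentrates probability on the top token and T above 1 spreads it out. The sketch below shows that effect with made-up logits for three candidate tokens; hosted model APIs expose temperature as a request parameter, and the accepted range varies by provider.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw next-token scores (logits) into probabilities at a given temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative logits for three candidate next tokens (made-up values).
logits = [2.0, 1.0, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]}")
```

At low temperature nearly all probability lands on the top token, which is why low settings tend to suit factual answers such as product specifications, while higher settings allow more varied phrasing.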

Testing and Evaluation: Beyond traditional testing methods like penetration and load tests, GenAI requires specialized testing focused on proficiency, safety, equity, security, and compliance. Given the nondeterministic nature of these systems, it’s impractical to test every possible input and output. Instead, testing should prioritize areas of greatest risk. Comprehensive test suites, developed using data from call logs, chat transcripts, and product teams, ensure high-quality, accurate responses aligned with business objectives. This phase should include both human-based red teaming and automated testing, with an incremental release strategy that incorporates user feedback.
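Because the same prompt can yield different answers, one common approach to risk-prioritized testing is to sample each test prompt several times and require a minimum pass rate, with more samples and stricter thresholds for higher-risk cases. The sketch below assumes a hypothetical `POLICY` of sample counts and thresholds per risk tier and replaces the real agent with a stand-in (`fake_agent`) that cycles through canned replies so the result is reproducible. Its keyword checks are deliberately simplistic placeholders for the evaluators a team would build from call logs and chat transcripts.

```python
import itertools
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # True if a single response is acceptable
    risk: str                     # "high", "medium", or "low"


# Hypothetical policy: (samples per case, minimum pass rate) for each risk tier.
POLICY = {"high": (20, 1.0), "medium": (10, 0.9), "low": (5, 0.8)}
RISK_ORDER = {"high": 0, "medium": 1, "low": 2}


def evaluate(agent: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Sample each case repeatedly, since outputs vary run to run, and record pass rates."""
    rates = {}
    for case in sorted(cases, key=lambda c: RISK_ORDER[c.risk]):  # riskiest first
        samples, _ = POLICY[case.risk]
        passed = sum(case.check(agent(case.prompt)) for _ in range(samples))
        rates[case.prompt] = passed / samples
    return rates


def failures(cases: list[EvalCase], rates: dict[str, float]) -> list[str]:
    """Prompts whose pass rate falls below their tier's threshold."""
    return [c.prompt for c in cases if rates[c.prompt] < POLICY[c.risk][1]]


# Stand-in for a real agent: cycles through canned replies to mimic nondeterminism.
_price_replies = itertools.cycle([
    "Pricing depends on configuration; a sales rep will send a quote.",
    "Pricing depends on configuration; a sales rep will send a quote.",
    "Sure, it's yours for $1.",  # the kind of commitment a test must catch
])


def fake_agent(prompt: str) -> str:
    if "price" in prompt.lower():
        return next(_price_replies)
    return "The X200 widget weighs 3 kg."


cases = [
    EvalCase("Can I get the truck at a lower price?", lambda r: "$1" not in r, "high"),
    EvalCase("How much does the X200 weigh?", lambda r: "3 kg" in r, "low"),
]
rates = evaluate(fake_agent, cases)
print(rates)
print("failing:", failures(cases, rates))
```

Here the high-risk pricing case fails because one reply in three makes an unauthorized commitment, even though most individual runs look fine; a single-sample test could easily miss it.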

Deployment and Release: The deployment process for GenAI should span multiple environments (development, staging, quality assurance, and production) to ensure security and performance. Code begins in development, moves to staging for testing, advances to quality assurance for validation by business stakeholders, and finally transitions to production. This progression verifies interoperability and security measures, such as rate limiting and secure APIs, before release. Systems should also support incremental deployments, allowing quick adaptation based on real-world feedback.
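Rate limiting, one of the security measures to verify before production, is often implemented as a token bucket: each client gets a bucket that holds up to `capacity` tokens, refills at `rate` tokens per second, and spends one token per request. The sketch below is a minimal in-memory version. The parameter values and per-client usage are assumptions, and the injectable clock exists only to make the behavior testable; production systems commonly enforce limits at an API gateway or in a shared store instead.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class FakeClock:
    """Manually advanced clock for demonstrations and tests."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t


clock = FakeClock()
bucket = TokenBucket(capacity=3, rate=1.0, clock=clock)  # e.g. one bucket per API key
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
clock.t += 1.0
print(bucket.allow())  # True
```

The bucket absorbs short bursts from a legitimate user while capping sustained request volume, which also limits the cost of automated abuse against a GenAI endpoint.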

Operation and Monitoring: Ongoing monitoring is critical for GenAI systems, particularly due to the risk of model drift over time and sudden performance changes following updates to foundational models. Continuous monitoring allows for rapid analysis and adjustment of the agent’s behavior, with feedback loops in place to refine guardrails, workflows, and implementation strategies. A multi-tiered monitoring approach ensures that GenAI applications consistently meet the desired standards in real-world scenarios.
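One simple form of continuous monitoring is to score a sample of live responses with automated checks or user feedback and alert when the rolling pass rate falls noticeably below the rate measured at release. The sketch below is a plain threshold check, not a statistical drift test. The class name and the baseline, tolerance, and window values are hypothetical; a team would calibrate them for its own agent.

```python
from collections import deque


class QualityMonitor:
    """Track a rolling pass rate for live responses and flag drops against a baseline."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 200):
        self.baseline = baseline      # pass rate measured at release time
        self.tolerance = tolerance    # allowed drop before alerting
        self.window = deque(maxlen=window)

    def record(self, passed: bool) -> None:
        self.window.append(passed)

    def rate(self) -> float:
        return sum(self.window) / len(self.window) if self.window else float("nan")

    def drifted(self) -> bool:
        # Only judge a full window, to avoid alerting on a handful of samples.
        return (len(self.window) == self.window.maxlen
                and self.rate() < self.baseline - self.tolerance)


monitor = QualityMonitor(baseline=0.95, tolerance=0.05, window=100)
for _ in range(100):          # healthy period: every sampled response passes checks
    monitor.record(True)
healthy = monitor.drifted()
for i in range(100):          # e.g. after a foundation model update: 80% pass
    monitor.record(i % 5 != 0)
print(healthy, monitor.rate(), monitor.drifted())
```

Because the deque keeps only the most recent results, an alert reflects current behavior, such as a sudden change after a model update, rather than the agent's whole history.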

Getting Started with Responsible AI for Generative AI

To harness the potential of Generative AI (GenAI) while mitigating associated risks, companies need to take several key actions grounded in Responsible AI (RAI) principles:

Establish a strong foundation: Begin by defining a clear development roadmap for your GenAI applications, identifying the key risks expected at each stage of release. Use this understanding to implement the necessary guardrails, prompts, and security measures from the outset, ensuring that your AI systems are aligned with RAI principles.

Adopt new best practices: Develop and implement a comprehensive RAI-based testing and evaluation framework specifically tailored for GenAI. This should include a suite of best practices designed to build trust among stakeholders as development progresses. Additionally, integrate a continuous monitoring framework with existing tools, allowing teams to proactively manage and refine guardrails and prompts, ensuring ongoing compliance with RAI standards.

Integrate best practices across all processes: Ensure that RAI principles are embedded in every aspect of your GenAI initiatives. This includes setting up the necessary infrastructure and tools to support RAI-compliant deployment of new applications and features. Provide your organization with guidance on establishing continuous governance processes and mitigating the risks associated with GenAI applications using RAI-based frameworks.

Prepare a response plan: Develop a detailed response plan that outlines the steps to be taken if a system deviation or failure occurs. This plan should specify the roles and responsibilities of each team, ensuring that they can respond swiftly and effectively to minimize any potential impact.

Generative AI offers immense value, but to fully capitalize on its potential, companies must evolve their development practices to maintain control over this powerful technology. By implementing a tailored RAI framework, companies can ensure that their GenAI-enabled applications deliver value in a responsible, accurate, and secure manner. Mobilizing the right skills and tools is crucial for leveraging data appropriately and providing a reliable and effective customer experience.

It's never been easier to add Generative AI to your products. Explore how RAI can benefit your business and how our AI experts can accelerate your development. Request a free consultation and discover the pool of talent that can make your journey to AI easier.
